OpenBioLink: A benchmark for automated hypothesis generation and link prediction in biomedicine

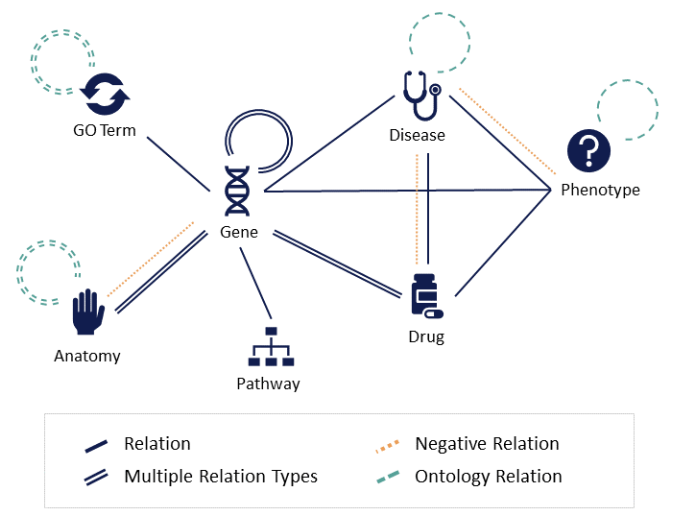

OpenBioLink is a resource and evaluation framework for evaluating link prediction models on heterogeneous biomedical graph data. It contains benchmark datasets as well as tools for creating custom benchmarks and training and evaluating models.

Peer reviewed version in the journal Bioinformatics (for citations)

The OpenBioLink benchmark aims to meet the following criteria:

- Openly available

- Large-scale

- Wide coverage of current biomedical knowledge and entity types

- Standardized, balanced train-test split

- Open-source code for benchmark dataset generation

- Open-source code for evaluation (independent of model)

- Integrating and differentiating multiple types of biological entities and relations (i.e., formalized as a heterogeneous graph)

- Minimized information leakage between train and test sets (e.g., avoid inclusion of trivially inferable relations in the test set)

- Coverage of true negative relations, where available

- Differentiating high-quality data from noisy, low-quality data

- Differentiating benchmarks for directed and undirected graphs in order to be applicable to a wide variety of link prediction methods

- Clearly defined release cycle with versions of the benchmark and public leaderboard