Whether benchmarking adequately reflects current AI capacities depends not only on the quality and validity of benchmark datasets but also on the properties of the metrics used to assess performance. As a first step in creating insights from the Intelligence Task Ontology, we analysed the prevalence of performance metrics currently used to measure progress in AI based on data covering 32209 reported performance results across 2298 distinct benchmark datasets.

Figure 1: Comparison of the number of benchmark datasets vs. the number of reported performance metrics per problem class.

To increase data quality and enable a thorough analysis, we conducted extensive manual curation of publicly available data on model performances and integrated the curated data with the ontology.

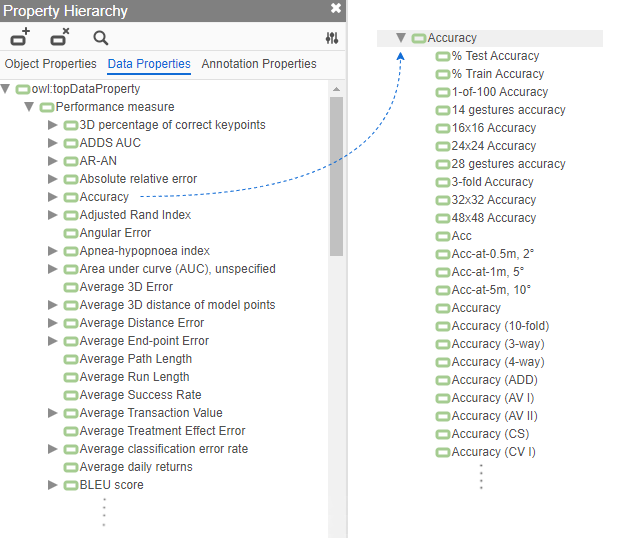

Figure 2: Property hierarchy after manual curation of the raw list of metrics. The left side of the image shows an excerpt of the list of top-level performance metrics; the right side shows an excerpt of the list of submetrics for the top-level metric ‘Accuracy’.
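A two-level hierarchy of this kind can be pictured as a mapping from each top-level metric to its submetrics, plus a lookup that resolves a raw, inconsistently spelled metric name to its top-level parent. The sketch below is only an illustration under assumptions: the submetric names, the `SUBMETRICS` dictionary, and the helper functions are hypothetical and are not taken from the curated ontology.

```python
# Hypothetical excerpt of a two-level metric hierarchy.
# The entries are illustrative only, not the curated list from the ontology.
SUBMETRICS = {
    "Accuracy": ["Top-1 Accuracy", "Top-5 Accuracy", "Acc"],
    "F-Measure": ["F1", "Macro F1"],
}


def _key(name: str) -> str:
    """Normalise a raw metric name: lowercase, underscores to spaces, collapse whitespace."""
    return " ".join(name.lower().replace("_", " ").split())


def build_index(hierarchy: dict) -> dict:
    """Map every normalised top-level and submetric name to its top-level metric."""
    index = {}
    for top, subs in hierarchy.items():
        index[_key(top)] = top
        for sub in subs:
            index[_key(sub)] = top
    return index


def top_level_metric(raw_name: str, index: dict):
    """Return the top-level metric for a raw name, or None if it is not covered."""
    return index.get(_key(raw_name))


INDEX = build_index(SUBMETRICS)
raw_names = ["top-1 accuracy", "  ACC ", "macro_f1", "BLEU"]
resolved = [top_level_metric(name, INDEX) for name in raw_names]
print(resolved)
```

Names that are not covered resolve to `None`, which would flag them for manual review rather than guessing a parent.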

The results of our analysis suggested that the current use of performance metrics entails the risk of yielding an inaccurate reflection of current AI capacities due to the metrics’ properties, especially when used with imbalanced benchmark datasets.
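The effect of class imbalance on a metric such as accuracy can be seen in a small worked example. The sketch below assumes a hypothetical binary benchmark with 95 negative and 5 positive examples and a trivial classifier that always predicts the negative class. Balanced accuracy and the Matthews correlation coefficient (MCC) are included purely for contrast; they are chosen here as illustrations and are not necessarily the alternative metrics the analysis refers to.

```python
from math import sqrt


def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def accuracy(tp, tn, fp, fn):
    """Fraction of all examples that were classified correctly."""
    return (tp + tn) / (tp + tn + fp + fn)


def balanced_accuracy(tp, tn, fp, fn):
    """Mean of the true positive rate and the true negative rate."""
    tpr = tp / (tp + fn) if (tp + fn) else 0.0
    tnr = tn / (tn + fp) if (tn + fp) else 0.0
    return (tpr + tnr) / 2


def mcc(tp, tn, fp, fn):
    """Matthews correlation coefficient; defined as 0.0 here when the denominator is zero."""
    denom = sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0


# Hypothetical imbalanced benchmark: 95 negatives, 5 positives.
y_true = [0] * 95 + [1] * 5
# Trivial classifier that always predicts the majority (negative) class.
y_pred = [0] * 100

counts = confusion_counts(y_true, y_pred)
acc = accuracy(*counts)
bal_acc = balanced_accuracy(*counts)
mcc_value = mcc(*counts)
print(f"accuracy={acc:.2f} balanced_accuracy={bal_acc:.2f} mcc={mcc_value:.2f}")
```

In this constructed case the classifier never identifies a positive example, yet accuracy alone reports 0.95, while the two class-aware metrics sit at their chance-level values (0.5 and 0.0).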

🠆 Read the pre-print on arXiv.

Abstract

Comparing model performances on benchmark datasets is an integral part of measuring and driving progress in artificial intelligence. A model’s performance on a benchmark dataset is commonly assessed based on a single performance metric or a small set of metrics. While this enables quick comparisons, it may also entail the risk of inadequately reflecting model performance if the metric does not sufficiently cover all performance characteristics. Currently, it is unknown to what extent this might impact current benchmarking efforts. To address this question, we analysed the current landscape of performance metrics based on data covering 3867 machine learning model performance results from the web-based open platform ‘Papers with Code’. Our results suggest that the large majority of metrics currently used to evaluate classification AI benchmark tasks have properties that may result in an inadequate reflection of a classifier’s performance, especially when used with imbalanced datasets. While alternative metrics that address problematic properties have been proposed, they are currently rarely applied as performance metrics in benchmarking tasks. Finally, we noticed that the reporting of metrics was partly inconsistent and partly unspecific, which may lead to ambiguities when comparing model performances.